A research team affiliated with UNIST has unveiled a new technique that could make training 3D object recognition AI models far more efficient without sacrificing accuracy. The development could sharply reduce the time and computing resources needed to build the AI systems used in autonomous vehicles, robotics, and digital twins.

Professor Jae-Young Sim and his research team at the UNIST Graduate School of Artificial Intelligence have developed a method for compressing 3D point cloud data, the type of data used to represent objects in three dimensions, so that AI models can learn from these datasets more easily and quickly.

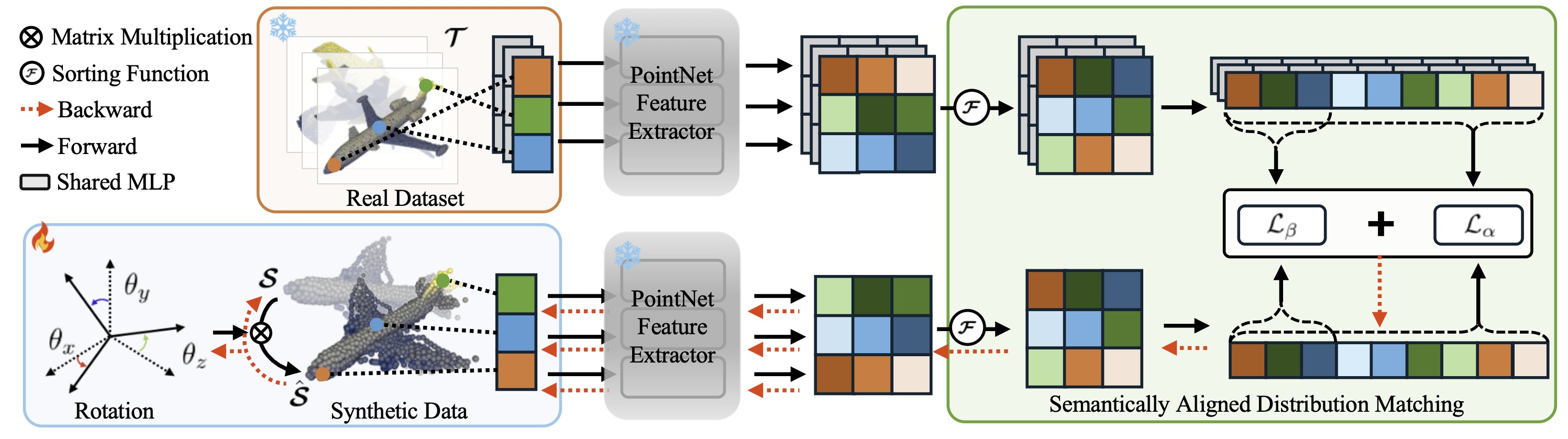

Dataset distillation condenses a large dataset into a much smaller set of representative samples, enabling faster training. While the approach has been explored for images and text, applying it to 3D point clouds has proven particularly difficult. A point cloud represents an object as a set of points, but these points have no inherent order and the same object may appear in many different orientations, which complicates building a compact, accurate summary.
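To give a rough feel for the general idea (this is not the UNIST team's code), one family of dataset distillation methods, distribution matching, optimizes a small synthetic set so that its average embedding under randomly sampled feature extractors matches that of the real data. The NumPy sketch below assumes plain 8-dimensional vectors rather than point clouds, a single random `tanh` layer as the feature extractor, and hand-derived gradients; all function names are hypothetical.

```python
import numpy as np

def mean_embedding(x, w):
    """Average output of one random tanh layer over a set of samples."""
    return np.tanh(x @ w).mean(axis=0)

def dm_loss(real, syn, w):
    """Squared distance between mean embeddings of the real and synthetic sets."""
    diff = mean_embedding(real, w) - mean_embedding(syn, w)
    return float(diff @ diff)

def dm_grad(real, syn, w):
    """Hand-derived gradient of dm_loss with respect to each synthetic sample."""
    h = np.tanh(syn @ w)                              # (m, k) synthetic features
    diff = mean_embedding(real, w) - h.mean(axis=0)   # (k,) embedding gap
    m = syn.shape[0]
    return -(2.0 / m) * ((1.0 - h ** 2) * diff) @ w.T  # (m, d)

rng = np.random.default_rng(0)
d, k = 8, 64
real = rng.normal(loc=1.0, scale=0.5, size=(2000, d))  # toy "large" dataset
syn = rng.normal(size=(10, d))                         # tiny synthetic set to learn
eval_ws = [rng.normal(size=(d, k)) / np.sqrt(d) for _ in range(20)]

def eval_loss():
    """Loss averaged over a fixed set of random feature extractors."""
    return float(np.mean([dm_loss(real, syn, w) for w in eval_ws]))

before = eval_loss()
for _ in range(1000):
    w = rng.normal(size=(d, k)) / np.sqrt(d)  # fresh random extractor each step
    syn -= 1.0 * dm_grad(real, syn, w)        # plain gradient descent, lr = 1.0
after = eval_loss()
print(f"distribution-matching loss: {before:.4f} -> {after:.4f}")
```

Ten synthetic vectors end up standing in for 2,000 real ones as far as these random features can tell; resampling the extractor at every step keeps the synthetic set from overfitting to any single one.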

Existing methods struggle because they compare features between the original and synthetic data, and the unordered, arbitrarily rotated nature of point clouds makes this comparison unreliable. The resulting summaries often misalign object parts or even misidentify objects.
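The ordering and rotation problems can be illustrated with a toy example. This NumPy sketch uses a random, elongated Gaussian blob as a stand-in for an object's points (an assumption; it is not ModelNet40 data). Shuffling the points or rotating the cloud leaves the object unchanged, yet a naive index-by-index comparison reports a large difference. Sorting each coordinate removes the dependence on point order, but not on orientation.

```python
import numpy as np

def rotate_z(points, angle):
    """Rotate an (N, 3) point cloud about the z-axis by `angle` radians."""
    c, s = np.cos(angle), np.sin(angle)
    rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return points @ rot.T

def pointwise_mse(a, b):
    """Naive comparison that assumes point i of `a` corresponds to point i of `b`."""
    return float(np.mean((a - b) ** 2))

def sorted_mse(a, b):
    """Order-independent comparison: sort each coordinate column first."""
    return float(np.mean((np.sort(a, axis=0) - np.sort(b, axis=0)) ** 2))

rng = np.random.default_rng(0)
# Toy stand-in for an object: an elongated blob of 1,024 points.
cloud = rng.normal(size=(1024, 3)) * np.array([3.0, 1.0, 0.5])
shuffled = cloud[rng.permutation(len(cloud))]   # same object, new point order
rotated = rotate_z(cloud, np.pi / 2)            # same object, new orientation

print(pointwise_mse(cloud, shuffled))  # large, although the shape is unchanged
print(sorted_mse(cloud, shuffled))     # 0.0: sorting removes the order dependence
print(sorted_mse(cloud, rotated))      # nonzero: sorting does not undo the rotation
```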

To address this, the team introduced two components: a new loss function, Semantically Aligned Distribution Matching (SADM), which automatically sorts points according to their semantic meaning, and a learnable rotation mechanism that lets the model optimize the orientation of objects during training.
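The article does not describe how SADM or the rotation module is implemented, so the following is only a toy sketch under stated assumptions. In place of SADM's semantic sorting, it uses a sliced-Wasserstein-style loss: both clouds are projected onto random directions and the projected values are sorted, so points are compared rank by rank rather than by index. This sorts by projected position, not by meaning. A single rotation angle about the z-axis serves as the learnable parameter and is updated by finite-difference gradient descent. Every name here is hypothetical.

```python
import numpy as np

def rotate_z(points, angle):
    """Rotate an (N, 3) point cloud about the z-axis by `angle` radians."""
    c, s = np.cos(angle), np.sin(angle)
    rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return points @ rot.T

def sorted_projection_loss(a, b, directions):
    """Order-independent distance between two equal-size point sets.

    Projects both sets onto each direction and sorts the projections, so the
    k-th smallest value of `a` is compared with the k-th smallest of `b`
    (a sliced-Wasserstein-style estimate, standing in for semantic alignment).
    """
    pa = np.sort(a @ directions.T, axis=0)  # (N, K), each column sorted
    pb = np.sort(b @ directions.T, axis=0)
    return float(np.mean((pa - pb) ** 2))

rng = np.random.default_rng(0)
# Hypothetical "real" object: an elongated blob of points.
target = rng.normal(size=(1024, 3)) * np.array([3.0, 1.0, 0.5])
# Hypothetical "synthetic" object: same shape, shuffled and rotated by 0.6 rad.
synthetic = rotate_z(target[rng.permutation(1024)], 0.6)

directions = rng.normal(size=(64, 3))
directions /= np.linalg.norm(directions, axis=1, keepdims=True)  # unit vectors

def loss_at(theta):
    """Loss after applying the learnable rotation `theta` to the synthetic cloud."""
    return sorted_projection_loss(rotate_z(synthetic, theta), target, directions)

theta, lr, eps = 0.0, 0.02, 1e-4  # learnable angle, step size, finite-difference step
initial_loss = loss_at(theta)
for _ in range(600):
    # A central finite difference stands in for autodiff in this NumPy sketch.
    grad = (loss_at(theta + eps) - loss_at(theta - eps)) / (2 * eps)
    theta -= lr * grad
final_loss = loss_at(theta)
print(f"learned angle {theta:.3f} rad, loss {initial_loss:.3f} -> {final_loss:.5f}")
```

The sorted comparison makes the loss blind to point order, and the learned angle removes the orientation mismatch that sorting alone cannot fix. In this toy case the angle should settle near -0.6 rad, undoing the rotation applied to the synthetic cloud.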

Figure 1: The overall framework of the proposed dataset distillation method for 3D point clouds.

Experimental results show that the technique can shrink datasets by up to 96% (that is, to just 4% of their original size) while maintaining high accuracy. For example, models trained on only a quarter of the original data achieved over 80% recognition accuracy on the ModelNet40 dataset, close to the nearly 88% achieved with the full dataset.
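For reference, the reported figures relate by simple arithmetic (no additional results are implied):

```latex
\begin{aligned}
\text{maximum reduction: } & 1 - 0.96 = 0.04 = \tfrac{1}{25} \text{ of the original data retained},\\
\text{ModelNet40 example: } & 1 - \tfrac{1}{4} = 0.75, \text{ i.e. a 75\% reduction},\\
\text{accuracy gap: } & (\text{nearly } 88\%) - (\text{over } 80\%) < 8 \text{ percentage points}.
\end{aligned}
```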

Professor Sim explained, “This method tackles the core issues caused by the unordered structure and rotational variations of 3D point clouds. It opens the door to faster, more cost-effective training for applications like autonomous driving, drones, robotics, and digital representations of real-world environments.”

The study has been accepted for presentation at the 39th Annual Conference on Neural Information Processing Systems (NeurIPS 2025), one of the most prestigious conferences in artificial intelligence research. The research was supported by the National Research Foundation of Korea (NRF) and the Institute for Information & Communications Technology Planning & Evaluation (IITP).

Journal Reference

Jae-Young Yim, Dongwook Kim, and Jae-Young Sim, “Dataset Distillation of 3D Point Clouds via Distribution Matching,” NeurIPS 2025.