With the advancement of artificial intelligence (AI), the issue of user privacy infringement has become increasingly serious. Federated learning (FL) has emerged as a core technology to address this challenge, especially in on-device AI, which has garnered significant interest from IT companies.

Professor Sung Whan Yoon and his team in the Graduate School of Artificial Intelligence at UNIST have developed a groundbreaking technology called FedGF (Federated Learning for Global Flatness) that enhances AI performance while safeguarding personal information. This innovation is poised to tackle the problem of user data leakage.

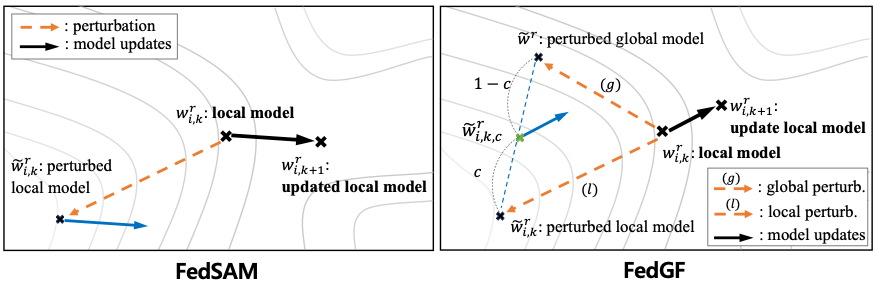

Figure 1. Schematic of the federated learning algorithm.

The research team has created a method that consistently delivers high performance across diverse user data distributions. Unlike existing technologies, which perform well only in environments similar to the training data, FedGF maintains its efficacy across various scenarios.

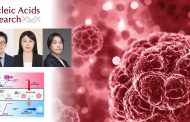

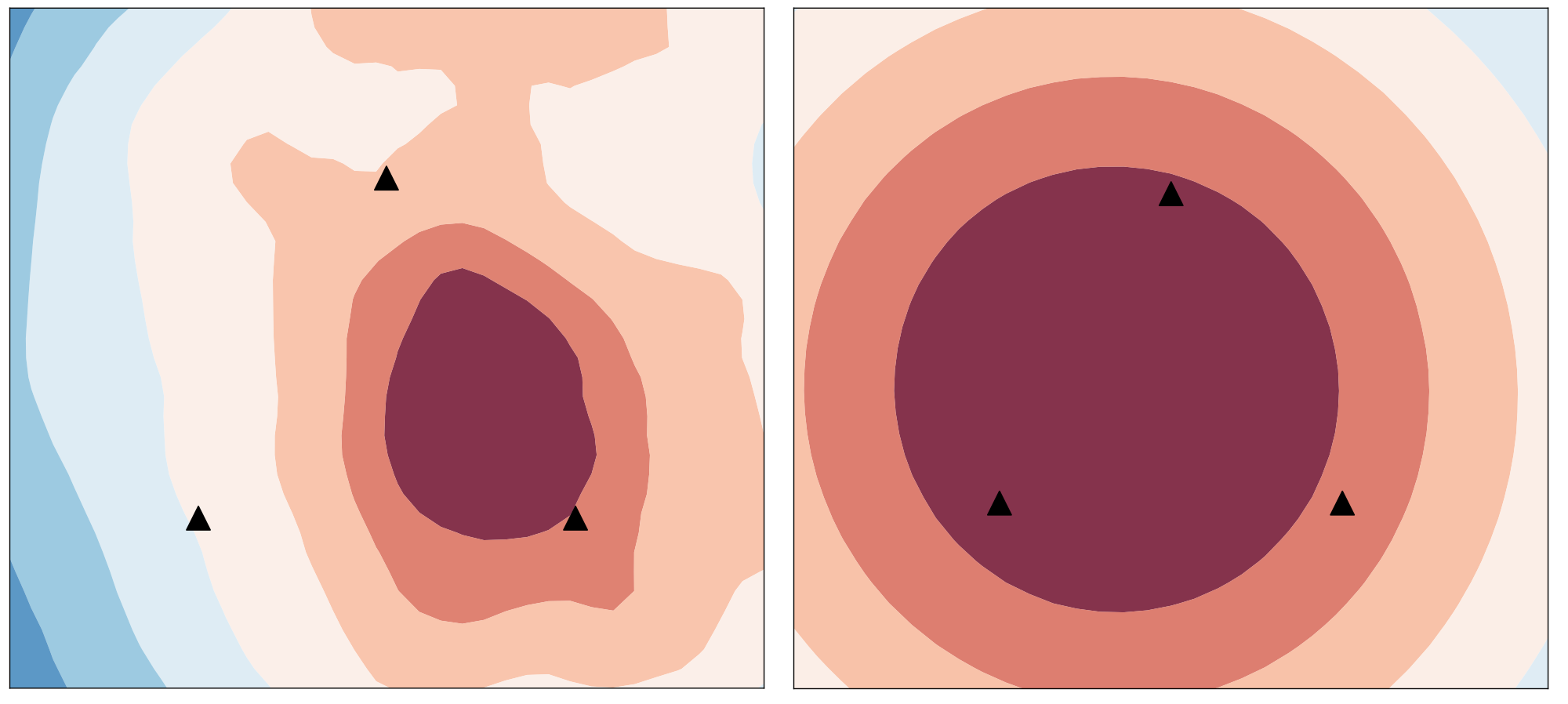

Figure 2. Visualization of the loss surface of FedSAM for the CIFAR-100 case (α = 0).

Federated learning protects personal information by training deep learning models directly on user devices. However, its effectiveness can be hampered by differences in data from one device to another. FedGF achieves high accuracy by optimizing the model from the results of local training on each device, without transmitting sensitive data to a central server.
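The basic idea of training on devices and sharing only model parameters can be illustrated with a minimal sketch of generic federated averaging. This is not the FedGF algorithm and does not include its flat-minima search; the function names (`local_update`, `federated_round`, `make_clients`), the linear model, and the synthetic data are all assumptions made for illustration.

```python
import numpy as np


def local_update(weights, X, y, lr=0.05, epochs=5):
    """Run a few gradient-descent steps of least-squares regression
    on one device's private data and return the updated weights."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w


def federated_round(global_w, client_data):
    """One communication round: every client trains locally, then the
    server averages the returned weights, weighted by sample count.
    Only weight vectors are exchanged; (X, y) never leave the client."""
    updates, sizes = [], []
    for X, y in client_data:
        updates.append(local_update(global_w, X, y))
        sizes.append(len(y))
    return np.average(np.stack(updates), axis=0,
                      weights=np.asarray(sizes, dtype=float))


def make_clients(num_clients=4, n=50, seed=0):
    """Hypothetical synthetic clients whose feature distributions are
    shifted from one another, mimicking heterogeneous user data."""
    rng = np.random.default_rng(seed)
    true_w = np.array([2.0, -1.0])
    clients = []
    for k in range(num_clients):
        X = rng.normal(loc=0.5 * k, scale=1.0, size=(n, 2))
        y = X @ true_w + rng.normal(scale=0.01, size=n)
        clients.append((X, y))
    return clients, true_w


if __name__ == "__main__":
    clients, true_w = make_clients()
    w = np.zeros(2)
    for _ in range(30):
        w = federated_round(w, clients)
    print("learned weights:", w)
    print("target weights: ", true_w)
```

In this toy setting all clients share the same underlying relationship, so plain averaging recovers it. With real heterogeneous data the local models can pull in different directions, which is the difficulty the article describes.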

Figure 3. Comparison between the existing method (left) and the proposed algorithm (right).

In addition to its robustness, FedGF is highly efficient, requiring fewer communication resources than traditional methods. This efficiency is particularly beneficial for mobile devices that rely on wireless communication, such as Wi-Fi.

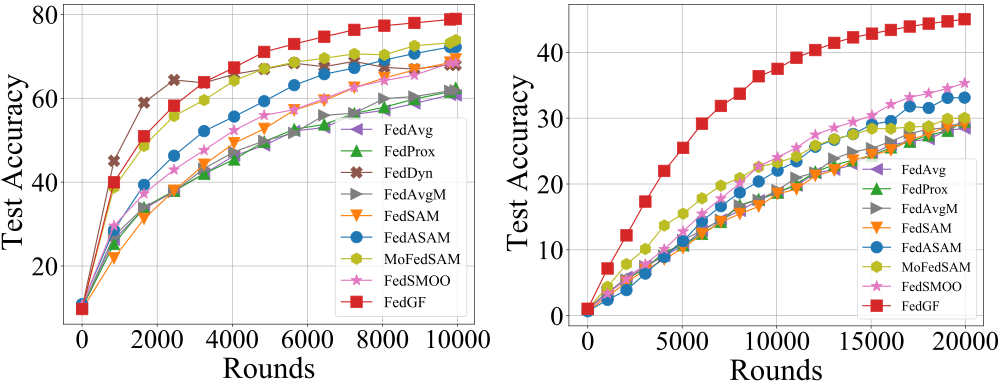

Figure 4. Performance of the proposed algorithm (FedGF, red box).

Professor Yoon stated, “Federated learning will serve as a crucial foundation in addressing AI privacy infringement.” He added, “It will significantly assist major IT companies in overcoming challenges related to personal information and data heterogeneity.”

First author Taehwan Lee remarked, “With FedGF technology, companies can develop high-performance AI models without infringing on personal information, thus paving the way for advancements in various fields such as IT, healthcare, and autonomous driving.”

Figure 5. Loss surface of FedGF for CIFAR-100 (α = 0).

The research findings were published online on July 20 at the International Conference on Machine Learning (ICML). This project was conducted as part of an international collaboration on information protection, supported by the Ministry of Science and ICT (MSIT), as well as an initiative for training talent in the fields of information and communication broadcasting.

Journal Reference

Taehwan Lee and Sung Whan Yoon, “Rethinking the Flat Minima Searching in Federated Learning,” ICML (2024).