A research team led by Professor Jaejun Yoo of the Graduate School of Artificial Intelligence at UNIST has announced the development of an advanced artificial intelligence (AI) model, ‘BF-STVSR (Bidirectional Flow-based Spatio-Temporal Video Super-Resolution),’ that improves video resolution and frame rate simultaneously.

Resolution and frame rate are critical factors that determine video quality. Higher resolution results in sharper images with more detailed visuals, while increased frame rates ensure smoother motion without abrupt jumps.

Traditional AI-based video restoration techniques typically handle resolution and frame rate enhancement separately and rely heavily on pre-trained optical flow networks for motion estimation. Optical flow describes the direction and speed at which each point in the image moves from one frame to the next, and these motion estimates are then used to generate intermediate frames. However, this approach is computationally expensive and prone to accumulated errors, which limits both the speed and the quality of video restoration.
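To make the conventional pipeline concrete, the sketch below shows how a precomputed optical flow field is commonly used to synthesize an intermediate frame: both neighbouring frames are backward-warped toward time `t` and then blended. It is a generic illustration, not code from BF-STVSR or from any particular flow network. The flow is supplied by hand rather than estimated, all names (`interpolate_frame`, `backward_warp`, `bilinear_sample`) are hypothetical, and approximating the flow at time `t` as a scaled copy of the frame-0-to-frame-1 flow assumes linear, spatially smooth motion.

```python
from typing import List, Tuple

# Hypothetical types for this sketch: a grayscale frame is a list of rows,
# and a flow field stores one (dx, dy) displacement per pixel.
Frame = List[List[float]]
Flow = List[List[Tuple[float, float]]]


def bilinear_sample(img: Frame, x: float, y: float) -> float:
    """Sample img at a fractional (x, y), replicating border pixels."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)  # floor, since x and y are non-negative here
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
    bottom = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
    return (1 - ay) * top + ay * bottom


def backward_warp(img: Frame, flow: Flow, scale: float) -> Frame:
    """out[y][x] = img sampled at (x, y) + scale * flow[y][x]."""
    h, w = len(img), len(img[0])
    return [
        [
            bilinear_sample(img, x + scale * flow[y][x][0], y + scale * flow[y][x][1])
            for x in range(w)
        ]
        for y in range(h)
    ]


def interpolate_frame(f0: Frame, f1: Frame, flow01: Flow, t: float) -> Frame:
    """Synthesize the frame at time t in (0, 1) between f0 and f1.

    Assumes linear, spatially smooth motion: the flow from time t back to
    frame 0 is approximated as -t * flow01, and the flow from time t to
    frame 1 as (1 - t) * flow01.
    """
    warped0 = backward_warp(f0, flow01, -t)
    warped1 = backward_warp(f1, flow01, 1.0 - t)
    # Blend the two warped candidates, weighting the temporally closer frame more.
    return [
        [(1.0 - t) * a + t * b for a, b in zip(row0, row1)]
        for row0, row1 in zip(warped0, warped1)
    ]


if __name__ == "__main__":
    f0 = [[0, 0, 10, 0, 0, 0, 0, 0]]  # bright pixel at x = 2
    f1 = [[0, 0, 0, 0, 10, 0, 0, 0]]  # same pixel moved 2 px to the right
    flow = [[(2.0, 0.0)] * 8]         # uniform rightward motion of 2 px
    print(interpolate_frame(f0, f1, flow, 0.5))
    # → [[0.0, 0.0, 0.0, 10.0, 0.0, 0.0, 0.0, 0.0]]
```

Every value in the output depends on the supplied flow: if the estimated flow is wrong, both warped candidates land in the wrong place, which is one concrete way errors from an external flow network carry over into the restored video.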

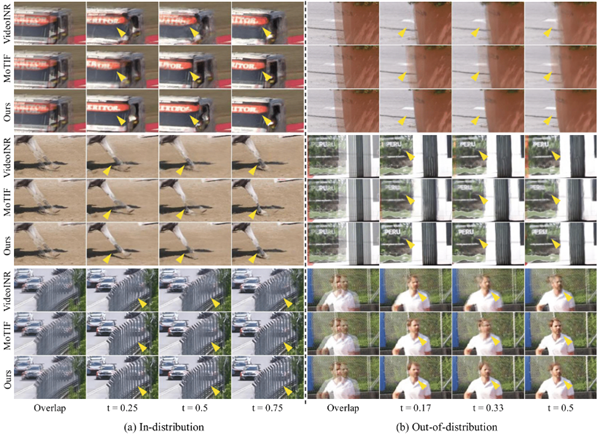

In contrast, ‘BF-STVSR’ uses signal-processing techniques tailored to the characteristics of video (B-splines and Fourier representations, as the paper's title indicates), which allow the model to learn bidirectional motion between frames on its own, without depending on an external optical flow network. By jointly inferring object contours and motion flow, the model enhances resolution and frame rate at the same time, producing more natural and coherent reconstructed video.
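The paper's title names B-splines as one of the model's two building blocks. As a generic illustration of why a B-spline basis suits continuous-time video (it can be queried at any fractional time, not only at captured frames), the sketch below evaluates a uniform cubic B-spline whose control points are per-frame samples of a single quantity, such as an object's x coordinate. This is textbook spline math, not BF-STVSR's actual formulation, which the article does not describe; the function names are hypothetical.

```python
import math
from typing import List, Sequence


def cubic_bspline_weights(u: float) -> List[float]:
    """Uniform cubic B-spline basis weights for a local parameter u in [0, 1]."""
    return [
        (1 - u) ** 3 / 6.0,
        (3 * u**3 - 6 * u**2 + 4) / 6.0,
        (-3 * u**3 + 3 * u**2 + 3 * u + 1) / 6.0,
        u**3 / 6.0,
    ]


def eval_bspline(samples: Sequence[float], t: float) -> float:
    """Evaluate a uniform cubic B-spline whose control points are per-frame samples.

    t is continuous time in frame units. Each segment blends four neighbouring
    control points, so t must lie in [1, len(samples) - 2].
    """
    n = len(samples)
    if n < 4 or not 1.0 <= t <= n - 2:
        raise ValueError("need >= 4 samples and 1 <= t <= len(samples) - 2")
    # Segment index; t == n - 2 falls into the last segment with u = 1.
    i = min(math.floor(t), n - 3)
    u = t - i
    weights = cubic_bspline_weights(u)
    return sum(w * samples[i - 1 + k] for k, w in enumerate(weights))


if __name__ == "__main__":
    x_positions = [0.0, 2.0, 4.0, 6.0, 8.0]  # object moving 2 px per frame
    # Query halfway between frames 2 and 3; approximately 5.0 up to rounding.
    print(eval_bspline(x_positions, 2.5))
```

Each value depends on only four neighbouring samples, the curve is twice continuously differentiable, and uniform (linear) motion is reproduced exactly. For non-linear motion the curve smooths the samples rather than passing through them.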

When applied to low-resolution, low-frame-rate videos, the model outperformed existing models, achieving higher Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM) scores. PSNR measures pixel-level fidelity to the original high-quality frames, while SSIM measures how well their structure is preserved. The higher scores indicate that even in videos with significant motion, human figures and fine details remain clear and undistorted, producing more realistic results.
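The two metrics can be made concrete with their textbook definitions. The sketch below computes PSNR from the mean squared error, and a simplified single-window ("global") SSIM, on small grayscale images. It is an illustration under stated assumptions: the article does not give the paper's evaluation protocol (color channel, cropping, window settings), the standard SSIM averages this statistic over local, typically Gaussian-weighted 11×11 windows rather than computing it once over the whole image, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def _flatten(img: Sequence[Sequence[float]]) -> List[float]:
    return [float(p) for row in img for p in row]


def psnr(ref, test, max_val: float = 255.0) -> float:
    """PSNR in dB: 10 * log10(MAX^2 / MSE). Higher means closer to the reference."""
    a, b = _flatten(ref), _flatten(test)
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    mse = sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val**2 / mse)


def global_ssim(ref, test, max_val: float = 255.0) -> float:
    """SSIM computed once over the whole image (a simplification of windowed SSIM)."""
    a, b = _flatten(ref), _flatten(test)
    n = len(a)
    c1 = (0.01 * max_val) ** 2  # stabilizing constants from the standard definition
    c2 = (0.03 * max_val) ** 2
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((x - mu_a) ** 2 for x in a) / n
    var_b = sum((y - mu_b) ** 2 for y in b) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / n
    # Luminance/contrast/structure terms combined into the usual two-factor form.
    num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    den = (mu_a**2 + mu_b**2 + c1) * (var_a + var_b + c2)
    return num / den


if __name__ == "__main__":
    ref = [[0, 50], [100, 150]]
    noisy = [[10, 50], [100, 150]]  # one pixel off by 10
    print(psnr(ref, noisy), global_ssim(ref, noisy))
```

For this example the MSE is 25, so `psnr` returns about 34.15 dB; identical images give infinite PSNR and an SSIM of 1.0, and any distortion pulls SSIM below 1.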

Professor Jaejun Yoo explained, “This technology has broad applications, from restoring security camera footage or black-box recordings captured with low-end devices to quickly enhancing compressed streaming videos for high-quality media content. It can also benefit fields such as medical imaging and virtual reality (VR).”

This research was led by first author Eunjin Kim, with Hyeonjin Kim as co-author. Their findings have been accepted for presentation at the 2025 Conference on Computer Vision and Pattern Recognition (CVPR), one of the most prestigious conferences in computer vision. CVPR 2025, held in Nashville, USA, from June 11 to 15, received 13,008 submissions and accepted only 22.1% of them (2,878 papers).

The project was supported by the Ministry of Science and ICT (MSIT), the National Research Foundation of Korea (NRF), the Institute for Information & Communications Technology Planning & Evaluation (IITP), and the UNIST Supercomputing Center.

Journal Reference

Eunjin Kim, Hyeonjin Kim, Kyong Hwan Jin, Jaejun Yoo, “BF-STVSR: B-Splines and Fourier-Best Friends for High Fidelity Spatial-Temporal Video Super-Resolution,” CVPR 2025.