Researchers at UNIST have announced a new artificial intelligence (AI) technology that reconstructs three-dimensional (3D) representations of unfamiliar objects manipulated with both hands, as well as simulated surgical scenes in which hands and medical instruments are intertwined. The advance enables highly accurate augmented reality (AR) visualizations and supports real-time interaction.

Led by Professor Seungryul Baek of the UNIST Graduate School of Artificial Intelligence, the team introduced BIGS (Bimanual Interaction 3D Gaussian Splatting), an AI model that visualizes complex interactions between hands and objects in 3D from a single RGB video. The technology reconstructs intricate hand-object dynamics in real time, even when the objects are unfamiliar or partially obscured.

Previous approaches in this domain could track only one hand at a time or handle only objects that had been scanned in advance, which limited their use in realistic AR and VR environments. By contrast, BIGS reliably predicts complete hand and object shapes even when parts of them are occluded. It does so without depth sensors or multiple cameras, relying only on a single RGB camera.

At its core, the model uses 3D Gaussian Splatting, a technique that represents a scene as a collection of 3D Gaussians, each with its own position, shape, opacity, and color, rather than as bare points. Unlike conventional point-cloud methods, whose discrete points produce hard boundaries, these smooth Gaussians allow contact surfaces and complex interactions to be reconstructed naturally. To cope with occlusion, the model aligns multiple hand instances to a shared canonical Gaussian structure. It also uses a pre-trained diffusion model as a prior through score distillation sampling (SDS), which lets it accurately reconstruct surfaces that never appear in the video, such as the backs of objects.
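For readers unfamiliar with Gaussian Splatting, the rendering step these methods share can be sketched in a few lines. The snippet below is a minimal pure-Python illustration of the standard splatting rule (depth-sort the Gaussians, then blend them front to back), not the BIGS implementation. It assumes each Gaussian has already been projected to a 2D ellipse with a known depth; the names `Gaussian2D`, `gaussian_weight`, and `render_pixel`, and the clamping and early-exit thresholds, are hypothetical choices made for illustration. It does not model the canonical hand alignment or the SDS prior.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Gaussian2D:
    """Hypothetical container for one Gaussian already projected to the image plane."""
    mean: tuple[float, float]           # projected center, in pixels
    cov: tuple[float, float, float]     # 2x2 covariance [[a, b], [b, c]] stored as (a, b, c)
    opacity: float                      # base opacity in [0, 1]
    color: tuple[float, float, float]   # RGB in [0, 1]
    depth: float                        # camera-space depth, used for sorting


def gaussian_weight(g: Gaussian2D, px: float, py: float) -> float:
    """Smooth falloff exp(-0.5 * d^T Sigma^-1 d) of splat g at pixel (px, py)."""
    a, b, c = g.cov
    det = a * c - b * b
    if det <= 0:
        return 0.0  # degenerate covariance: treat as invisible
    dx, dy = px - g.mean[0], py - g.mean[1]
    # Inverse of [[a, b], [b, c]] is [[c, -b], [-b, a]] / det
    mahalanobis_sq = (c * dx * dx - 2.0 * b * dx * dy + a * dy * dy) / det
    return math.exp(-0.5 * mahalanobis_sq)


def render_pixel(gaussians: list[Gaussian2D], px: float, py: float,
                 background: tuple[float, float, float] = (0.0, 0.0, 0.0)) -> tuple[float, float, float]:
    """Front-to-back alpha compositing: C = sum_i c_i * a_i * prod_{j<i} (1 - a_j)."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # share of light not yet absorbed by nearer splats
    for g in sorted(gaussians, key=lambda s: s.depth):
        alpha = min(0.99, g.opacity * gaussian_weight(g, px, py))  # assumed clamp
        if alpha < 1.0 / 255.0:
            continue  # contribution too small to matter
        for k in range(3):
            color[k] += transmittance * alpha * g.color[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:
            break  # pixel is effectively opaque; stop early
    # Whatever light remains lets the background show through
    return tuple(color[k] + transmittance * background[k] for k in range(3))


if __name__ == "__main__":
    # A half-transparent red splat in front of an opaque blue one, both centered on the pixel
    red = Gaussian2D((0.0, 0.0), (1.0, 0.0, 1.0), 0.5, (1.0, 0.0, 0.0), depth=1.0)
    blue = Gaussian2D((0.0, 0.0), (1.0, 0.0, 1.0), 1.0, (0.0, 0.0, 1.0), depth=2.0)
    print(render_pixel([blue, red], 0.0, 0.0))  # → approximately (0.5, 0.0, 0.495)
```

Because each splat fades smoothly with distance from its center instead of ending at a hard edge, overlapping hand and object Gaussians blend where they touch, which is the property the paragraph above credits for natural contact reconstruction.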

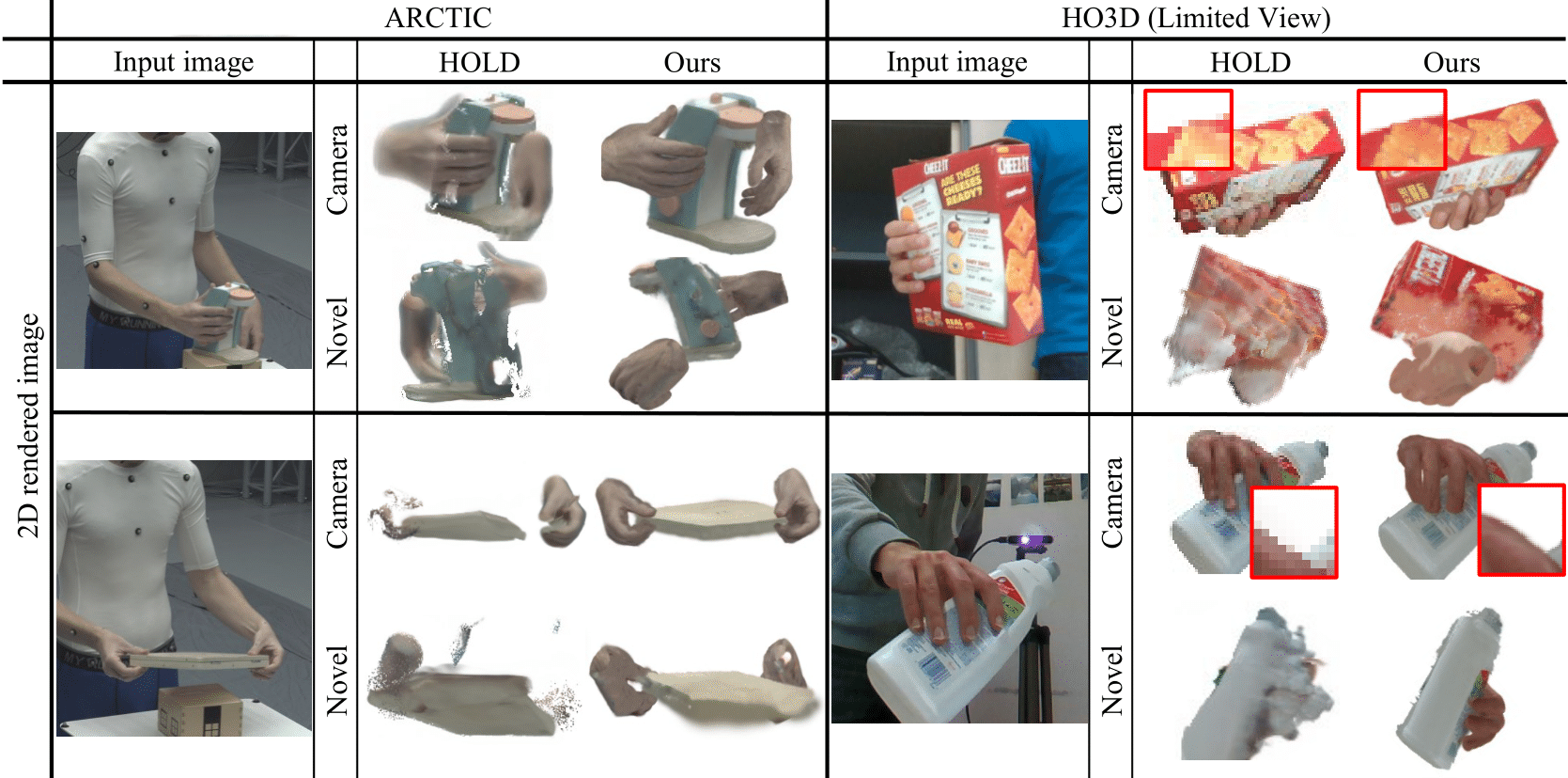

Figure 1. Results of reconstructing hand-object interactions from various viewpoints using the ‘BIGS’ method.

Extensive experiments on public benchmark datasets such as ARCTIC and HO3Dv3 showed that BIGS outperforms existing methods in hand pose accuracy, object shape reconstruction, contact modeling, and rendering quality. These capabilities hold significant promise for applications in virtual and augmented reality, robotic control, and remote surgical simulation.

This research was conducted with contributions from first author Jeongwan On, along with Kyeonghwan Gwak, Gunyoung Kang, Junuk Cha, Soohyun Hwang, and Hyein Hwang. Professor Baek remarked, “This advancement is expected to facilitate real-time interaction reconstruction in various fields, including VR, AR, robotic control, and remote surgical training.”

The findings have been accepted for presentation at CVPR 2025 (the Conference on Computer Vision and Pattern Recognition), a premier international conference in computer vision, to be held in the United States from June 11 to 15, 2025. This research was supported by the Ministry of Science and ICT, the National Research Foundation of Korea, and the Institute for Information & Communications Technology Planning & Evaluation (IITP).

Journal Reference

Jeongwan On, Kyeonghwan Gwak, Gunyoung Kang, et al., “BIGS: Bimanual Category-agnostic Interaction Reconstruction from Monocular Videos via 3D Gaussian Splatting,” CVPR 2025 (2025).