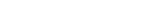

Achieving high reliability in AI systems—such as autonomous vehicles that stay on course even in snowstorms or medical AI that can diagnose cancer from low-resolution images—depends heavily on model robustness. While data augmentation has long been a go-to technique for enhancing this robustness, the specific conditions under which it works best remained unclear—until now.

Professor Sung Whan Yoon and his research team from the Graduate School of Artificial Intelligence at UNIST have developed a mathematical framework that explains when and how data augmentation improves a model’s resilience against unexpected changes in data distribution. The team announced that they have rigorously proven the conditions under which data augmentation enhances model robustness. This result paves the way for more systematic and effective design of augmentation strategies, which could speed up AI development.

Deep learning models often struggle when faced with data that slightly differs from what they were trained on, leading to sharp drops in performance. Data augmentation, which involves creating modified versions of training data, helps address this issue. However, choosing the most effective transformations has traditionally been a process of trial and error.
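
As a rough illustration of what "creating modified versions of training data" can mean in practice, the Python sketch below applies a small circular shift and Gaussian noise to a one-dimensional sample. The function names `augment` and `augment_dataset`, the choice of transformations, and the parameter values are assumptions made for this example; they are not taken from the team's paper.

```python
import random


def augment(sample, noise_std=0.05, max_shift=1, rng=None):
    """Return a modified copy of a 1-D sample (hypothetical transformations).

    The sample is circularly shifted by a random offset in
    [-max_shift, max_shift], then Gaussian noise is added to each value.
    """
    rng = rng or random.Random()
    n = len(sample)
    shift = rng.randint(-max_shift, max_shift)
    shifted = [sample[(i - shift) % n] for i in range(n)]
    return [x + rng.gauss(0.0, noise_std) for x in shifted]


def augment_dataset(samples, copies=4, seed=0):
    """Keep every original sample and append `copies` augmented variants of it."""
    rng = random.Random(seed)
    out = []
    for s in samples:
        out.append(list(s))
        out.extend(augment(s, rng=rng) for _ in range(copies))
    return out


if __name__ == "__main__":
    for row in augment_dataset([[0.0, 1.0, 2.0, 3.0]], copies=2):
        print([round(v, 3) for v in row])
```

Which transformations to use, and how strong to make them, is exactly the choice that has traditionally been left to trial and error.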

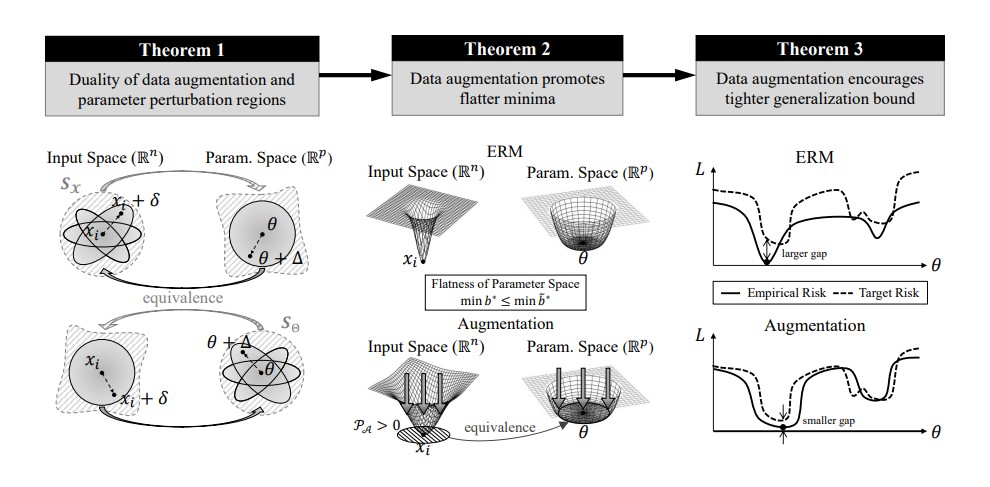

The team identified a specific condition—called Proximal-Support Augmentation (PSA)—which ensures that the augmented data densely covers the space around original samples. This condition, when satisfied, leads to flatter, more stable minima in the model’s loss landscape. Flat minima are known to be associated with greater robustness, making models less sensitive to shifts or attacks.

Figure 1: A conceptual overview of how augmentations can relate to model robustness.
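
To make the link between flat minima and robustness more concrete, the following Python sketch compares two toy one-dimensional loss functions that share the same minimum at zero. It estimates how much the loss rises when the weight is nudged slightly away from the minimum, a simple stand-in for sharpness. The two losses, the `sharpness` helper, and the perturbation radius are hypothetical choices for illustration; the sketch does not implement the PSA condition or the team's analysis.

```python
import random


def sharp_loss(w):
    """Toy loss with a narrow, steep minimum at w = 0."""
    return 50.0 * w ** 2


def flat_loss(w):
    """Toy loss with a wide, shallow minimum at w = 0."""
    return 0.5 * w ** 2


def sharpness(loss, w_star, radius=0.1, trials=1000, seed=0):
    """Average loss increase when w_star is perturbed uniformly within +/- radius."""
    rng = random.Random(seed)
    base = loss(w_star)
    total = 0.0
    for _ in range(trials):
        w = w_star + rng.uniform(-radius, radius)
        total += loss(w) - base
    return total / trials


if __name__ == "__main__":
    print("sharp minimum:", sharpness(sharp_loss, 0.0))
    print("flat minimum: ", sharpness(flat_loss, 0.0))
```

The flat loss shows a much smaller average increase under the same perturbations, which is the intuition behind a flat minimum being less sensitive to small shifts.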

Experimental results confirmed that augmentation strategies satisfying the PSA condition outperform others in improving robustness across various benchmarks.

Professor Yoon explained, “This research provides a solid scientific foundation for designing data augmentation methods. It will help build more reliable AI systems in environments where data can change unexpectedly, such as self-driving cars, medical imaging, and manufacturing inspection.”

This work has been accepted for presentation at the 40th Annual AAAI Conference on Artificial Intelligence (AAAI-26)—one of the most prestigious international conferences in the field of AI. Out of 23,680 submissions, only 4,167 papers were accepted (17.6%).

The study was supported by the Ministry of Science and ICT (MSIT), the Institute of Information & Communications Technology Planning & Evaluation (IITP), the Graduate School of Artificial Intelligence at UNIST, the AI Star Fellowship Program at UNIST, and the National Research Foundation of Korea (NRF).

Journal Reference

Weebum Yoo and Sung Whan Yoon, AAAI ’26 (2026).